Grouping Data in Python

DataFrames are great tools for exploring data about individual observations, or rows, of data in a dataset. However, we often want to know about statistics on an entire category of data!

For example, the Illini Football Dataset contains all football games played by Illinois since 1892. When sorted by the Opponent you can see that we have played many teams more than once (in the full dataset, we've played some teams over 70 times!):

Season Date Location Opponent OpponentRank Result IlliniScore OpponentScore Note 483 1979 9/22/1979 @ Air Force NaN W 27.0 19.0 NaN 473 1980 9/27/1980 vs. Air Force NaN T 20.0 20.0 NaN 9 2019 8/31/2019 vs. Akron NaN W 42.0 3.0 NaN 288 1996 9/21/1996 vs. Akron NaN W 38.0 7.0 NaN 458 1982 12/29/1982 Memphis, TN Alabama NaN L 15.0 21.0 Liberty Bowl 1145 1903 9/30/1903 vs. American Osteopath NaN W 36.0 0.0 NaN 1159 1902 10/1/1902 vs. American Osteopath NaN W 22.0 0.0 NaN 287 1996 9/14/1996 @ Arizona NaN L 0.0 41.0 NaN 355 1990 9/8/1990 @ Arizona NaN L 16.0 28.0 NaN 320 1993 9/18/1993 vs. Arizona NaN L 14.0 16.0 NaN 298 1995 9/16/1995 vs. Arizona NaN W 9.0 7.0 NaN 391 1987 9/12/1987 vs. Arizona State NaN L 7.0 21.0 NaN 96 2012 9/8/2012 @ Arizona State NaN L 14.0 45.0 NaN 379 1988 9/10/1988 @ Arizona State NaN L 16.0 21.0 NaN 109 2011 9/17/2011 vs. Arizona State NaN W 17.0 14.0 NaN 107 2011 9/3/2011 vs. Arkansas State NaN W 33.0 15.0 NaN 218 2002 9/14/2002 vs. Arkansas State NaN W 59.0 7.0 NaN 251 1999 9/4/1999 vs. Arkansas State NaN W 41.0 3.0 NaN 423 1985 12/31/1985 Atlanta, GA Army NaN L 29.0 31.0 Peach Bowl 913 1934 11/3/1934 vs. Army NaN W 7.0 0.0 NaN

The first twenty rows of the "Illini Football Dataset" when sorted by Opponent.

When we group data in a DataFrame, we are exploring data about all rows with similar data in one or more columns. Several questions we can ask about the Illini Football dataset includes:

- What was the average number of points scored by the Illini score against each opponent they played? Ex: How many points did the Illini score verses Purdue? ...verses Arizona? ...verses Nebraska? (We are grouping on different values in the

Opponentcolumn.) - What is the standard deviation of points scored by the Illini in home games vs. away games? (The grouping on different values in the

Locationcolumn, wherevs.are home games and@are away games.)

Using df.groupby to Create Groups

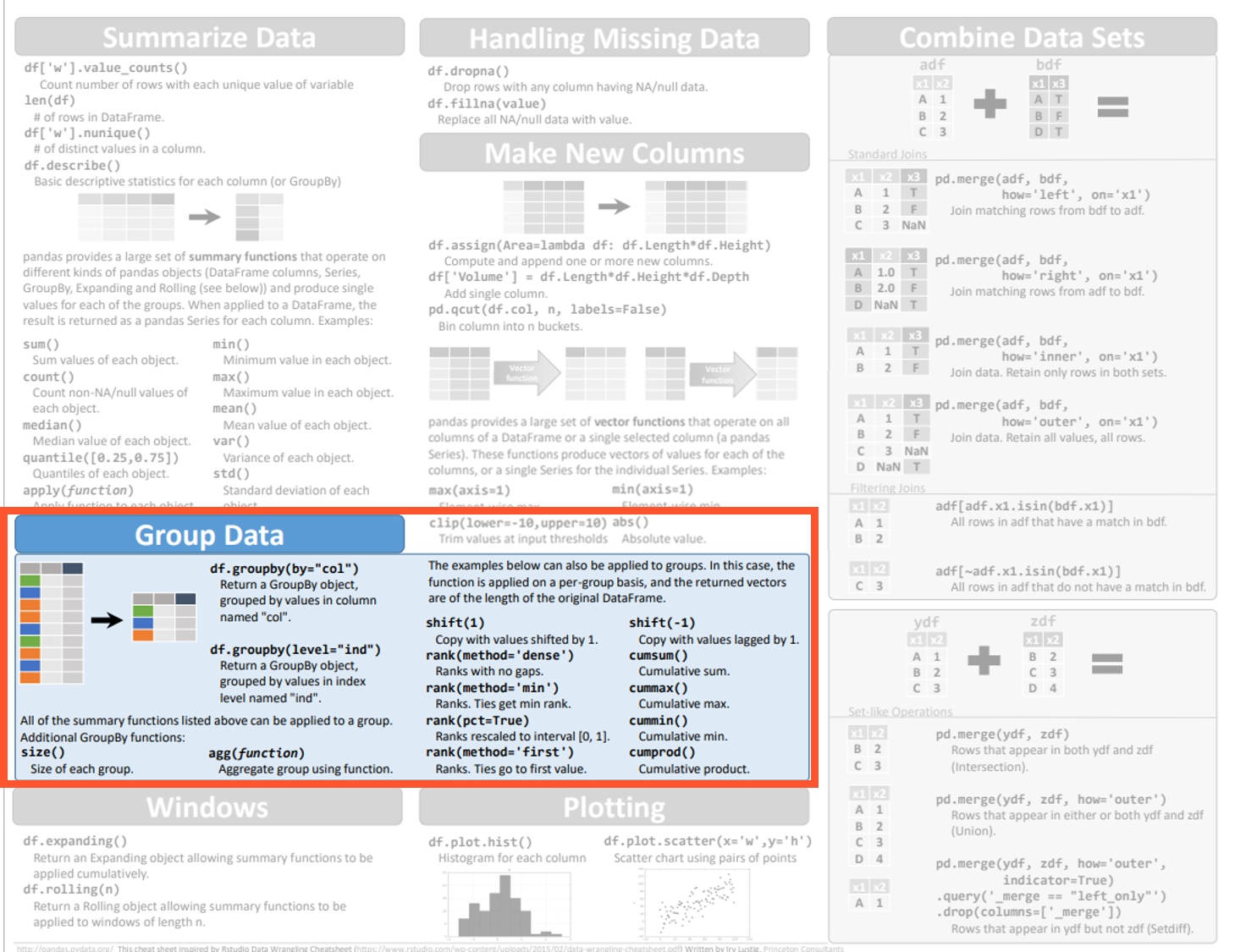

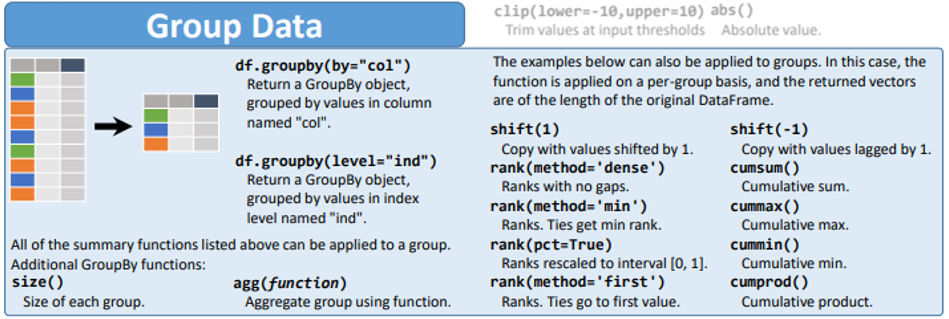

The pandas cheat sheet provides us an overview of the "Group Data" in Python:

The syntax of groupby requires us to provide one or more columns to create groups of data. For example, if we group by only the Opponent column, the following command creates groups based on the unique values in the Opponent column:

Commonly, the by= argument name is excluded since it is not required for simple groups:

Internally pandas is creating many groups, where each group contains ALL rows that have the same value in Opponent. Looking at the twenty rows from the Illini Football Dataset again, I can highlight the different groups to show what pandas is doing:

| Season | Date | Location | Opponent | OpponentRank | Result | IlliniScore | OpponentScore | Note | |

|---|---|---|---|---|---|---|---|---|---|

Group where Opponent == "Air Force" (2 rows) | |||||||||

| 483 | 1979 | 9/22/1979 | @ | Air Force | NaN | W | 27.0 | 19.0 | NaN |

| 473 | 1980 | 9/27/1980 | vs. | Air Force | NaN | T | 20.0 | 20.0 | NaN |

Group where Opponent == "Akron" (2 rows) | |||||||||

| 9 | 2019 | 8/31/2019 | vs. | Akron | NaN | W | 42.0 | 3.0 | NaN |

| 288 | 1996 | 9/21/1996 | vs. | Akron | NaN | W | 38.0 | 7.0 | NaN |

Group where Opponent == "Alabama" (1 row) | |||||||||

| 458 | 1982 | 12/29/1982 | Memphis, TN | Alabama | NaN | L | 15.0 | 21.0 | Liberty Bowl |

Group where Opponent == "American Osteopath" (2 rows) | |||||||||

| 1145 | 1903 | 9/30/1903 | vs. | American Osteopath | NaN | W | 36.0 | 0.0 | NaN |

| 1159 | 1902 | 10/1/1902 | vs. | American Osteopath | NaN | W | 22.0 | 0.0 | NaN |

Group where Opponent == "Arizona" (4 rows) | |||||||||

| 287 | 1996 | 9/14/1996 | @ | Arizona | NaN | L | 0.0 | 41.0 | NaN |

| 355 | 1990 | 9/8/1990 | @ | Arizona | NaN | L | 16.0 | 28.0 | NaN |

| 320 | 1993 | 9/18/1993 | vs. | Arizona | NaN | L | 14.0 | 16.0 | NaN |

| 298 | 1995 | 9/16/1995 | vs. | Arizona | NaN | W | 9.0 | 7.0 | NaN |

Group where Opponent == "Arizona State" (4 rows) | |||||||||

| 391 | 1987 | 9/12/1987 | vs. | Arizona State | NaN | L | 7.0 | 21.0 | NaN |

| 96 | 2012 | 9/8/2012 | @ | Arizona State | NaN | L | 14.0 | 45.0 | NaN |

| 379 | 1988 | 9/10/1988 | @ | Arizona State | NaN | L | 16.0 | 21.0 | NaN |

| 109 | 2011 | 9/17/2011 | vs. | Arizona State | NaN | W | 17.0 | 14.0 | NaN |

Group where Opponent == "Arkansas State" (3 rows) | |||||||||

| 107 | 2011 | 9/3/2011 | vs. | Arkansas State | NaN | W | 33.0 | 15.0 | NaN |

| 218 | 2002 | 9/14/2002 | vs. | Arkansas State | NaN | W | 59.0 | 7.0 | NaN |

| 251 | 1999 | 9/4/1999 | vs. | Arkansas State | NaN | W | 41.0 | 3.0 | NaN |

Group where Opponent == "Army" (2 rows) | |||||||||

| 423 | 1985 | 12/31/1985 | Atlanta, GA | Army | NaN | L | 29.0 | 31.0 | Peach Bowl |

| 913 | 1934 | 11/3/1934 | vs. | Army | NaN | W | 7.0 | 0.0 | NaN |

The first twenty rows of the "Illini Football Dataset" when sorted by Opponent.

Working with Group Data

Unfortunately, a group is complex and hard to visually represent so pandas will not provide any visual output when you create a group. Instead, we will either:

- Use the group to explore summary statistics about each group (

group.describe()), or - Aggregate all of the groups using a aggravation function (

group.agg(...)).

We'll explore them both!

Viewing Summary Statistics of Group Data

When we create a group data in Python, we will commonly refer to the variable that contains the grouped data as group.

On a group, group.describe() provides summary statistics of all numeric columns of data for each group. Using the same DataFrame with the Illini Football Data grouped by Opponent, we see the summary statistic of every group:

Season OpponentRank IlliniScore OpponentScore count mean std min 25% 50% 75% max count mean std min 25% 50% 75% max count mean std min 25% 50% 75% max count mean std min 25% 50% 75% max Opponent Air Force 2.0 1979.500000 0.707107 1979.0 1979.25 1979.5 1979.75 1980.0 0.0 NaN NaN NaN NaN NaN NaN NaN 2.0 23.500000 4.949747 20.0 21.75 23.5 25.25 27.0 2.0 19.500000 0.707107 19.0 19.25 19.5 19.75 20.0 Akron 2.0 2007.500000 16.263456 1996.0 2001.75 2007.5 2013.25 2019.0 0.0 NaN NaN NaN NaN NaN NaN NaN 2.0 40.000000 2.828427 38.0 39.00 40.0 41.00 42.0 2.0 5.000000 2.828427 3.0 4.00 5.0 6.00 7.0 Alabama 1.0 1982.000000 NaN 1982.0 1982.00 1982.0 1982.00 1982.0 0.0 NaN NaN NaN NaN NaN NaN NaN 1.0 15.000000 NaN 15.0 15.00 15.0 15.00 15.0 1.0 21.000000 NaN 21.0 21.00 21.0 21.00 21.0 American Osteopath 2.0 1902.500000 0.707107 1902.0 1902.25 1902.5 1902.75 1903.0 0.0 NaN NaN NaN NaN NaN NaN NaN 2.0 29.000000 9.899495 22.0 25.50 29.0 32.50 36.0 2.0 0.000000 0.000000 0.0 0.00 0.0 0.00 0.0 Arizona 4.0 1993.500000 2.645751 1990.0 1992.25 1994.0 1995.25 1996.0 0.0 NaN NaN NaN NaN NaN NaN NaN 4.0 9.750000 7.135592 0.0 6.75 11.5 14.50 16.0 4.0 23.000000 14.764823 7.0 13.75 22.0 31.25 41.0 ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... Western Illinois 3.0 2013.333333 5.686241 2007.0 2011.00 2015.0 2016.50 2018.0 0.0 NaN NaN NaN NaN NaN NaN NaN 3.0 33.000000 11.532563 21.0 27.50 34.0 39.00 44.0 3.0 4.666667 8.082904 0.0 0.00 0.0 7.00 14.0 Western Kentucky 2.0 2015.500000 2.121320 2014.0 2014.75 2015.5 2016.25 2017.0 0.0 NaN NaN NaN NaN NaN NaN NaN 2.0 31.000000 15.556349 20.0 25.50 31.0 36.50 42.0 2.0 20.500000 19.091883 7.0 13.75 20.5 27.25 34.0 Western Michigan 6.0 1999.666667 26.112577 1947.0 2005.00 2009.5 2011.75 2016.0 0.0 NaN NaN NaN NaN NaN NaN NaN 6.0 27.333333 17.385818 10.0 18.50 23.5 28.50 60.0 6.0 20.833333 9.537645 7.0 15.50 21.5 26.00 34.0 Wisconsin 87.0 1966.758621 34.164774 1895.0 1944.00 1969.0 1994.50 2020.0 1.0 14.0 NaN 14.0 14.0 14.0 14.0 14.0 87.0 16.563218 11.407567 0.0 7.00 14.0 24.00 51.0 87.0 19.540230 14.123874 0.0 7.00 20.0 27.50 56.0 Youngstown State 1.0 2014.000000 NaN 2014.0 2014.00 2014.0 2014.00 2014.0 0.0 NaN NaN NaN NaN NaN NaN NaN 1.0 28.000000 NaN 28.0 28.00 28.0 28.00 28.0 1.0 17.000000 NaN 17.0 17.00 17.0 17.00 17.0

The output of df.groupby("Opponent").describe().

A few observations:

- The rows are now labeled with the Opponent. The rows will always be based on what we grouped our data by in the

groupbycommand. - The columns are all of the numeric categories in the dataset (

Season,OpponentRank,IlliniScore, andOpponentScore). - The sub-columns are various summary statistics (

countis the number of total rows included in the group;meanis the average of the values of those rows;minis the minimum value,50%is the median value, etc).

Aggregating Groups in Grouped Data

In many cases, we want to continue the analysis with a summary statistic of group data. On a group, group.agg(...) provides the ability to aggregate all rows within a group into a single value. There are hundreds of available functions, but a few are very common:

df_count = group.agg("count")will count the number of rows in each group. This does not look at the data within the rows at all, it only counts how many rows are each group! For example, the Illini has played Akron only twice so the count is 2.df_mean = group.agg("mean")will find the mean (average) of each numeric column for each group. For example, the two Akron games the Illini scored 42 and 38. Therefore, the mean is 40.df_sum = group.agg("sum")will find the sum of each numeric column for each group. The sum of all the points the Illini scored against Akron is 80.Other strings that can be used include:

"std"for each group's standard deviation,"median"for each group's median value,"var"for variance, and many more.

To make working with the DataFrame as simple as possible, we will ALWAYS use .reset_index() after .agg(...).

Example: Average Points Scored in Home vs. Away Games

I have always heard that a sports team has a "home team advantage", where they will preform better at home than away. Let's see if the Illini, on average, has scored more points at home or away?

Exploring the dataset, the Location column indicates if the game is played at home (vs.), at the opponent's stadium (@), or at a neutral site (ex: Memphis, TN):

| Season | Date | Location | Opponent | OpponentRank | Result | IlliniScore | OpponentScore | Note | |

|---|---|---|---|---|---|---|---|---|---|

| 483 | 1979 | 9/22/1979 | @ | Air Force | NaN | W | 27.0 | 19.0 | NaN |

| 473 | 1980 | 9/27/1980 | vs. | Air Force | NaN | T | 20.0 | 20.0 | NaN |

| 9 | 2019 | 8/31/2019 | vs. | Akron | NaN | W | 42.0 | 3.0 | NaN |

| 288 | 1996 | 9/21/1996 | vs. | Akron | NaN | W | 38.0 | 7.0 | NaN |

| 458 | 1982 | 12/29/1982 | Memphis, TN | Alabama | NaN | L | 15.0 | 21.0 | Liberty Bowl |

| 1145 | 1903 | 9/30/1903 | vs. | American Osteopath | NaN | W | 36.0 | 0.0 | NaN |

| 1159 | 1902 | 10/1/1902 | vs. | American Osteopath | NaN | W | 22.0 | 0.0 | NaN |

| 287 | 1996 | 9/14/1996 | @ | Arizona | NaN | L | 0.0 | 41.0 | NaN |

| 355 | 1990 | 9/8/1990 | @ | Arizona | NaN | L | 16.0 | 28.0 | NaN |

| 320 | 1993 | 9/18/1993 | vs. | Arizona | NaN | L | 14.0 | 16.0 | NaN |

| 298 | 1995 | 9/16/1995 | vs. | Arizona | NaN | W | 9.0 | 7.0 | NaN |

| 391 | 1987 | 9/12/1987 | vs. | Arizona State | NaN | L | 7.0 | 21.0 | NaN |

| 96 | 2012 | 9/8/2012 | @ | Arizona State | NaN | L | 14.0 | 45.0 | NaN |

| 379 | 1988 | 9/10/1988 | @ | Arizona State | NaN | L | 16.0 | 21.0 | NaN |

| 109 | 2011 | 9/17/2011 | vs. | Arizona State | NaN | W | 17.0 | 14.0 | NaN |

| 107 | 2011 | 9/3/2011 | vs. | Arkansas State | NaN | W | 33.0 | 15.0 | NaN |

| 218 | 2002 | 9/14/2002 | vs. | Arkansas State | NaN | W | 59.0 | 7.0 | NaN |

| 251 | 1999 | 9/4/1999 | vs. | Arkansas State | NaN | W | 41.0 | 3.0 | NaN |

| 423 | 1985 | 12/31/1985 | Atlanta, GA | Army | NaN | L | 29.0 | 31.0 | Peach Bowl |

| 913 | 1934 | 11/3/1934 | vs. | Army | NaN | W | 7.0 | 0.0 | NaN |

The first twenty rows of the "Illini Football Dataset" when sorted by Opponent.

Creating group data based rows that have the same value for Location, we use groupby

Since we know we're interested in the average points, we can aggregate our group data together using "mean". The full Python code includes a groupby, an agg, and a reset_index:

Location Season OpponentRank IlliniScore OpponentScore 0 @ 1963.700971 14.5 15.902913 20.147573 1 Atlanta, GA 1985.000000 NaN 29.000000 31.000000 2 Birmingham, AL 1988.000000 NaN 10.000000 14.000000 3 Bronx, NY 1938.500000 NaN 0.000000 6.500000 4 Chicago, IL 1963.111111 NaN 16.555556 18.444444 5 Cleveland, OH 1944.500000 NaN 10.250000 24.000000 6 Dallas, TX 2014.000000 NaN 18.000000 35.000000 7 Detroit, MI 1953.000000 NaN 11.000000 17.500000 8 El Paso, TX 1991.000000 NaN 3.000000 6.000000 9 Houston, TX 2010.000000 NaN 38.000000 14.000000 10 Indianapolis, IN 1928.333333 NaN 17.333333 2.333333 11 Memphis, TN 1988.000000 NaN 22.500000 10.500000 12 Miami, FL 1999.000000 NaN 63.000000 21.000000 13 Milwaukee, WI 1899.000000 NaN 0.000000 23.000000 14 New Orleans, LA 2001.000000 NaN 34.000000 47.000000 15 Omaha, NE 1892.000000 NaN 20.000000 0.000000 16 Orlando, FL 1989.000000 NaN 31.000000 21.000000 17 Pasadena, CA 1970.000000 NaN 25.600000 24.400000 18 Peoria, IL 1897.000000 NaN 6.000000 0.000000 19 Rock Island, IL 1899.000000 NaN 0.000000 58.000000 20 San Diego, CA 1992.000000 NaN 17.000000 27.000000 21 San Francisco, CA 2011.000000 NaN 20.000000 14.000000 22 Santa Clara, CA 2019.000000 NaN 20.000000 35.000000 23 St. Louis, MO 1969.100000 NaN 17.700000 26.000000 24 Tampa, FL 1990.000000 NaN 0.000000 30.000000 25 vs. 1959.526773 13.5 21.115942 16.021739

The average group value for all rows in the dataset, where each group is based on the value of each row's Location. (26 rows)

There are quite a few neutral sites, so let's write a conditional on df_mean to display only the home and away games:

Location Season OpponentRank IlliniScore OpponentScore 0 @ 1963.700971 14.5 15.902913 20.147573 25 vs. 1959.526773 13.5 21.115942 16.021739

Average group value for home and away games. (2 rows)

This DataFrame provides our answer! Specifically:

- When the Illini is playing games at home (when

Location == "vs."), the Illini score an average of 21.1 points. - When the Illini is playing games at the opponent's stadium (when

Location == "@"), the Illini score an average of only 15.9 points. - This is a very large difference (5.2 points) and suggests that the "home team advantage" may be a real thing!

Example Walk-Throughs with Worksheets

Video 1: Types of Variables

Video 2: Reading Histograms (Part 1)

Video 3: Reading Histograms (Part 2)

Practice Questions

Q1: Which two commands will make equivalent groups in Python?Q2: You have a DataFrame `df` holding STAT 100 midterm scores for each student and their major. How would you find the average midterm score for each major in Python?

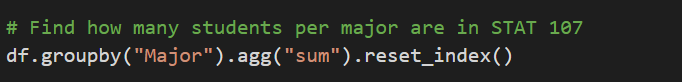

Q3: How would you fix the following line of code that is attempting to find how many students per major are in STAT 107?

Q4: You already created grouped data in Python using the .groupby() function. What function would you use to display summary statistics for all numeric columns for each group?

Q5: In order to simplify the process of working with DataFrames in Python, what function should always be used after using .agg()